AI Risk Assessment & Bias Audits

Seeing the risks before they become real-world problems

NexterLaw helps organizations uncover hidden risks in their AI systems, from legal exposure to ethical pitfalls, and offers practical, expert-backed solutions to fix them.

As AI becomes more embedded in hiring, finance, healthcare, education, and everyday decision-making, it's critical to make sure these systems are safe, fair, and accountable. That’s where our risk and bias audits come in.

Our services include:

End-to-End AI Risk Assessments

We review how AI is designed, trained, deployed, and monitored, flagging potential issues such as non-compliance, liability gaps, or lack of explainability.

Bias & Discrimination Audits

Using legal, ethical, and statistical lenses, we check for bias in datasets, algorithms, and outcomes, helping clients align with fairness standards and anti-discrimination laws.

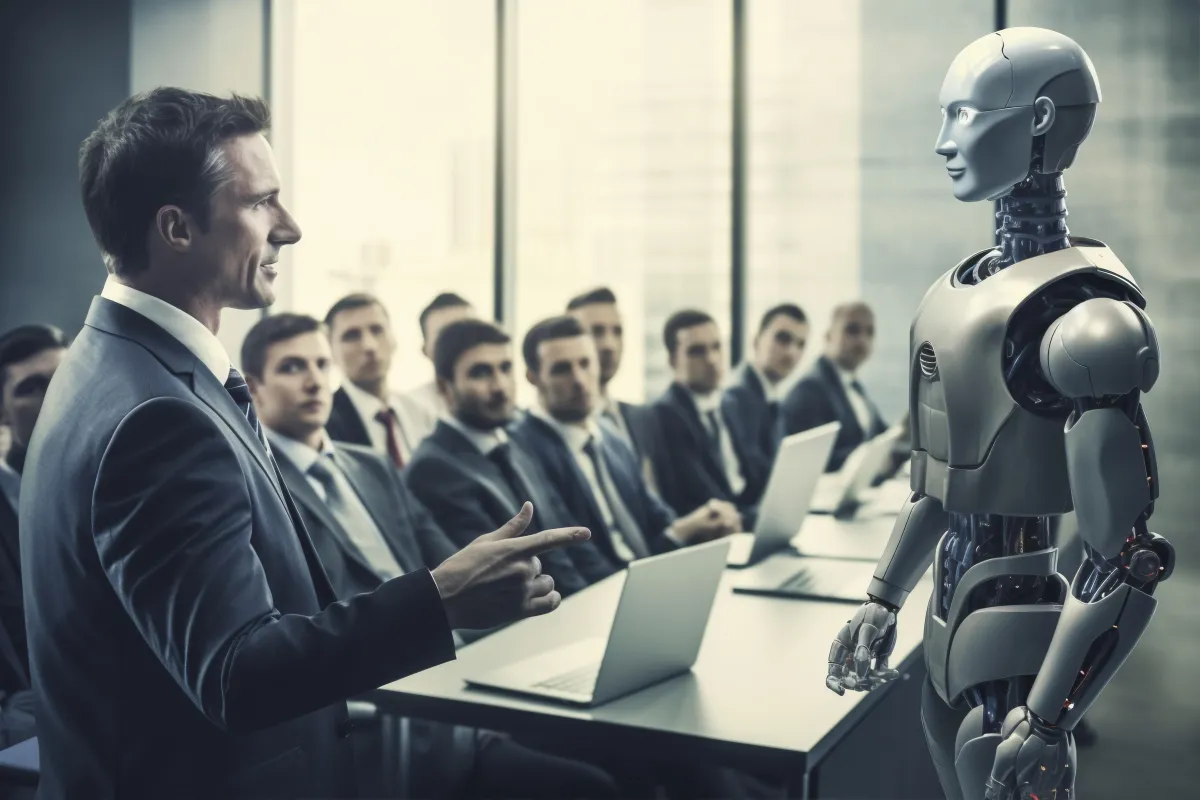

Executive & Legal Team Briefings

We translate technical audit findings into actionable insights for senior leadership, risk managers, and legal teams.

Mitigation Planning & Ethical Redesign

We don’t just highlight problems; we help fix them. From improving datasets to redesigning workflows, we guide teams in making AI systems safer and more trustworthy.

Sector-Specific Compliance Checks

Tailored audits for industries such as HR, insurance, finance, healthcare, and education, where AI risks can lead to serious human and legal consequences.

Why it matters

Unchecked AI systems can lead to biased outcomes, reputational damage, and legal challenges. With growing regulatory scrutiny and public awareness, proactive auditing is no longer optional; it’s part of responsible innovation.

Why NexterLaw?

Our audits are led by Dr. Siamak Goudarzi, an international expert in AI law, human rights, and digital ethics. His experience as a former judge, legal academic, and author of AI for Legal Professionals and Code of Respect ensures that every audit balances technical, legal, and ethical perspectives.

We don’t just assess the risks. We help you build systems you can stand behind.

To book an AI risk audit or bias assessment, contact us: